In-house compliance processes are often considered static, complex, and time-consuming. If intelligently anchored, however, the compliance division plays a key role in promoting data-driven innovation effectively and in conformity with statutory regulations.

Technology-oriented European companies often labor under the perception that the laws, standards, and rules relating to data protection and security are a barrier to extensive innovation. One commonly expressed opinion is that the strict laws in these areas are causing Europe to fall far behind China and the United States. The sense is that these regulations block the use of the data that are produced (or could be produced) for data-driven innovation. This is presumed to be the reason why even today very few data-driven innovations originate in Europe.

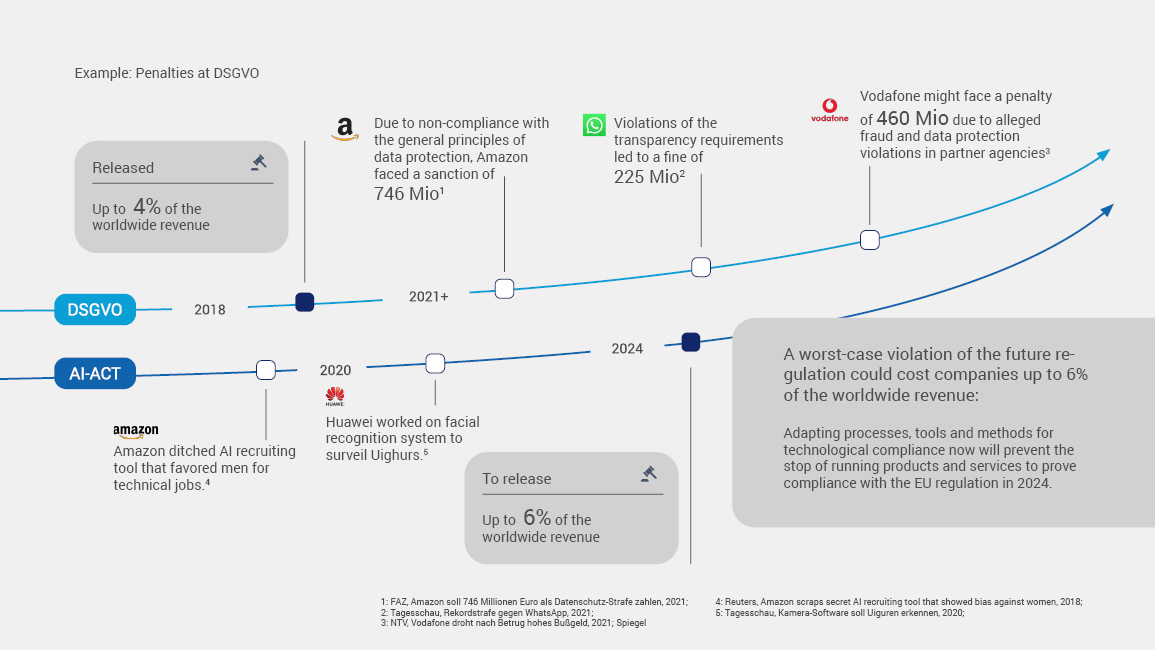

Companies cannot possibly remain competitive without innovations. But failure to give due regard to data protection can have far-reaching consequences for companies or even individuals. The General Data Protection Regulation (GDPR) provides for penalties as high as 4 percent of global annual turnover or €20 million, whichever is higher, in the event of an infringement. If criminal offenses are committed during the handling of data (such as the unauthorized, deliberate transfer of personal data of a large number of persons to third parties), there is even the threat of imprisonment for up to three years. Although assessed penalties were rather mild in the first years after the GDPR became applicable in 2018, this grace period now appears to be over. In the EU, for example, fines of almost one billion euros were imposed in the third quarter of 2021 alone, levied against companies such as Amazon and WhatsApp.
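The upper limit of such a fine can be illustrated with a short calculation. The following is a minimal sketch, assuming the rule in Art. 83(5) GDPR that the ceiling is the higher of €20 million or 4 percent of worldwide annual turnover. The function name and the sample turnover figures are hypothetical, and actual fines are set case by case by the supervisory authorities.

```python
def gdpr_fine_ceiling_eur(annual_turnover_eur: int) -> int:
    """Return the maximum fine under Art. 83(5) GDPR in whole euros.

    The ceiling is the higher of EUR 20 million or 4 percent of
    worldwide annual turnover. Integer arithmetic avoids rounding noise.
    """
    four_percent = annual_turnover_eur * 4 // 100
    return max(20_000_000, four_percent)


# Hypothetical companies: for the smaller one the fixed amount is the
# ceiling; for the larger one the turnover-based amount is higher.
small_company = gdpr_fine_ceiling_eur(100_000_000)    # 20_000_000
large_company = gdpr_fine_ceiling_eur(5_000_000_000)  # 200_000_000
```

For any company with a turnover above €500 million, the percentage-based ceiling is the one that applies.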

Other technological developments and innovations are now also being regulated by law. The proposed Artificial Intelligence Act (AI Act), for instance, has been on the table since April 2021; it foresees penalties of up to 6 percent of annual global revenues if infringements are determined.

The implementation of the GDPR in companies’ operating environments represents a massive business risk. A Bitkom study notes that three-quarters of the 502 companies surveyed state that innovation projects have failed owing to specific data protection requirements. In nine out of ten companies, projects have been suspended because of ambiguities about their compliance with the GDPR.

Implementation of regulation poses major challenges

In an analysis, Detecon has identified three problem areas of particular significance in companies that have had to abandon data-driven innovation projects entailing the use of new technologies.

- The impact and requirements of the relevant data protection regulation were not considered in the development from the beginning. If this impact analysis is not carried out until later in the project, it usually leads to costly iteration loops.

- In-house compliance processes are regarded as static, complex, and time-consuming and, moreover, consume substantial resources, some of them external, for audits and consulting. In addition, many processes have not yet been adapted to new procedures such as machine learning or data self-services that employees can use to independently access data lakes for the simulation of processes. If these compliance processes are not carried out until the final stages of the development process, the complications for the project are enormous and, in the worst case, lead to its abandonment.

- Some companies prohibit the inclusion of certain technologies such as machine learning or proprietary data as a general principle owing to complexities and ambiguities in interpretation. The inevitable consequence is the limitation of process innovation within the company.

In general, there seems to be a lack of awareness both of laws and regulations and of practical information. What is more, technologies or procedures are even banned across the board owing to the lack of a framework and governance for reliable innovation processes. These factors are considered in proposing the following hypothesis:

Hypothesis:

The compliance division plays a key role in progressing toward data-centric innovation capability. It is a bridge and translator between companies and lawmakers. If intelligently anchored, the support, translation, and assistance it can provide open the door to data-driven innovation.

Providing business departments with a secure framework for the practical application of laws and regulations when working with innovative technologies requires a holistic compliance framework from the outset.

Framework keeps the focus on people and technology

The goal of a compliance framework is to assure the lawful adoption of data-driven technologies without impairing the value of innovation. A fundamental principle of the framework is the avoidance of any liability risks and harm to the company’s reputation.

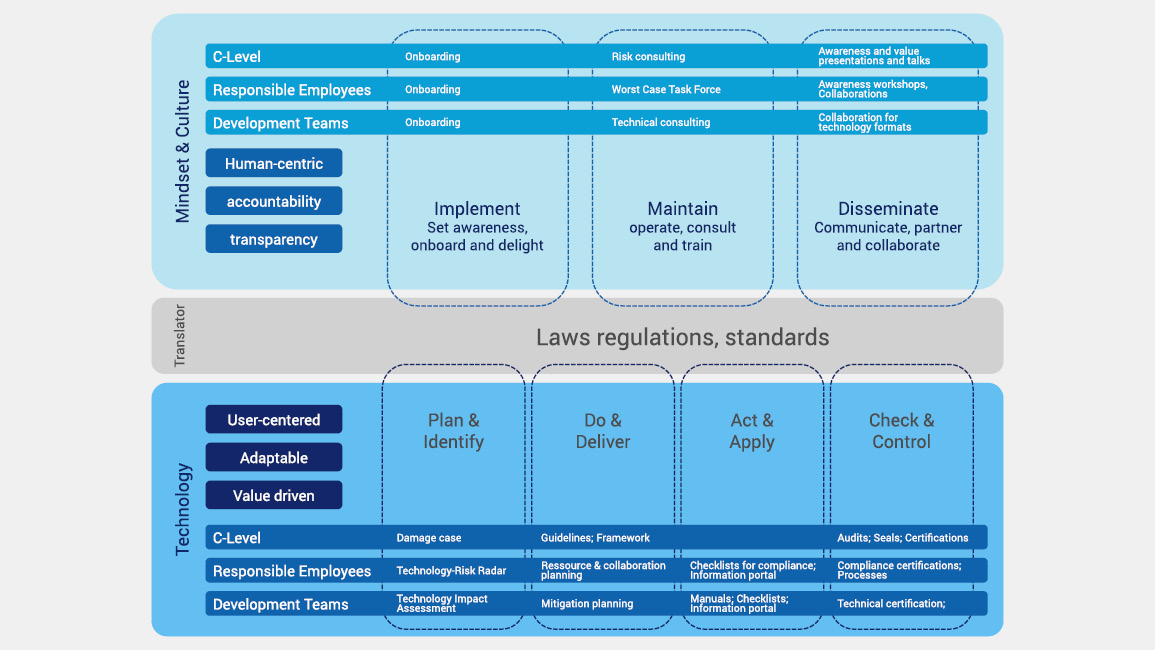

The compliance division assumes the role of translator of laws, regulations, and standards, but goes even further to ensure the company’s commitment to the values it has determined specifically for itself. For instance, more and more companies are developing artificial intelligence guidelines that simultaneously give rise to the need to translate these guidelines into practical procedures.

Structure of a compliance framework

In the compliance framework shown in Figure 2, laws and standards lay the foundation for the levels of “Technology” and “Mindset & Culture” on which the specific initiatives are built.

The “Mindset & Culture” level focuses in particular on imparting and deepening the required knowledge of the pertinent laws and values. Principles such as human-centricity, transparency, and accountability are essential here. Concrete fields of action in this regard are described below.

- Creating awareness (of responsibility) and building knowledge: Developments must remain within the boundaries of the legal framework. This message must be known at every level in the company, and all employees should be aware of their individual responsibility. This prompts many compliance divisions to offer specific onboarding training courses during which new employees are also taught practical basic knowledge about laws right from the commencement of their employment. Once employees have internalized values and regulations, they also have a clear profile in the outside world.

- Availability of actions and supervision of consulting services:

When new technologies are used in a company, questions often arise that can rarely be resolved by the developers alone. Provision of a non-bureaucratic consulting service manned by compliance experts who can quickly clarify any issues is important.

- Communication and partnerships:

Transparency about values and conformity is an important factor for customers when making decisions to buy. Communication campaigns aimed at achieving this transparency can reinforce values and create competitive advantages. Partnerships are helpful for obtaining best practices or establishing a joint strategic position. When new legislation such as the AI Act is proposed, sharing information and experience about early implementation is very valuable. Results from the cooperative actions can even significantly influence legislation.

The “Technology” layer should orchestrate technology adoption so that it is user-centric, customizable, and value-driven. It is characterized by cooperation with the pertinent business departments that manage or use a specific technology.

- Identification and analysis of potential risks of liability or harm to reputation from new technologies and processes:

Technologies that will play an important strategic role for the company in the future, but that are also subject to significant regulation, can be identified using radar tools or impact maps. For instance, the interplay of social and political influences on a technology may be what causes it to fall under certain regulatory requirements in the first place. One example is the use of artificial intelligence in the HR department. The proposed AI Act would allow the use of the technology solely within truly narrow limits.

- Definition of guidelines and principles:

The technologies or practices identified in the first step are now analyzed. In addition, a target vision for dealing with them is defined. This target vision outlines principles that are based on the legal foundation as well as the company’s own values. Many companies on their own initiative set binding guidelines for working with artificial intelligence that often have a broader scope than present (or even future) regulation.

- Structuring user-friendly and practical measures, tools, and methods:

In the next step, the guidelines and principles are translated into practice so that they can be understood at each level of responsibility and in the professional context. The choice of topics (handling data in the context of artificial intelligence, for example) as well as the method that is employed should be developed in collaboration with users to maximize acceptance. During this phase, creative methods such as a chatbot that quickly finds an answer to various questions in lieu of a manual are conceivable.

- Development of review measures:

Finally, tools, measures, processes, and methods are developed to determine whether the previously defined guidelines have actually been implemented in the pertinent technology. One example is an ethical seal that can serve to verify that previously established guidelines for working with artificial intelligence have been followed.

User-centric approach to the legally compliant use of technologies

The sooner laws, regulations, and standards are integrated into the development process, the greater the legal certainty of the product or service will be and the less tedious rework will be needed. In the best case, a win-win situation is created, one that not only guarantees legal compliance, but also creates added value for users, such as certificates that can be used online.

The following steps are essential for a user-centric approach:

- Set the objective and desired impact of the initiatives.

Define the long- and short-term effects you want to achieve with the activities. This ensures that the team and all stakeholders are working toward the same vision. Set expectations for the long-term changes. Define the principles and values that steer your actions. Avoid the danger of focusing first and foremost solely on the legislation or technology in all activities. Actions should be directed above all at the users. Work at peer level with users and develop user-friendly tools, methods, and processes that enrich the user journey.

- Set the technology focus (Plan & Identify).

Start by identifying technologies and procedural errors that could trigger liability issues or cause harm to reputation. While keeping in mind the company’s own strategy, business models, and use cases, perform a structured analysis to determine what impacts are imminent and require directed management.

- Set the framework for action (Do & Deliver).

The next step is to prepare the framework for action to show the different systems or technologies and data categories. For example, the principles of processing set forth in Art. 5 GDPR must be observed whenever personal data are being processed. They are lawfulness, fairness, and transparency; purpose limitation; data minimization; accuracy; storage limitation; integrity and confidentiality of processing; and accountability. Depending on the technology, system, or practice, the scope of action can be refined further. A catalog of values drawn up for the specific company can map out the framework for action before ethically oriented artificial intelligence is first used.

- Define the users.

The next step is to identify all stakeholders and users. This involves taking into account any and all persons who will in the future be in charge of developing and operating products and services in compliance with the GDPR and values or who will be responsible for ensuring this compliance. Describe in addition their (personal) needs and interests and the relationships among users. Above all, find the pain points that users have during the integration of legal requirements or regulations. User interviews or surveys can later help to decide in favor of specific initiatives or to prioritize them. Then classify the user groups. Various classifications help to provide the right tools for a group. User groups might be distinguished according to criteria such as different levels of responsibility or their involvement in the different product life cycles of the technology.

- Understand the needs per user group.

Now define behavior according to user group and look into all aspects that could prompt or hamper the users’ behavior (processes, product life cycles, etc.). Consult staff members who have extensive experience and in-depth knowledge of the problems or their context. Don't stay in your silo; instead, focus on user interests. Research and interviews with relevant stakeholders in the data-centric environment often reveal that the implementation of ethical values poses problems because of specific requirements at the level of technological development. Not only are the regulations often not understood; the differences among role models also mean that individuals are often not consciously aware of their own responsibility for handling data.

Beyond this, other problem areas are evident:

1. The lack of easy-to-understand and practical information.

2. Uncertainty about whether and to what extent the GDPR or other regulations and their specific requirements must be applied to certain systems.

3. Lack of clarity on how to implement the laws in the relevant phase of the development life cycle.

4. Time-consuming and complicated audits and verification systems shortly before the launch of the system.

- Challenge for the needs of a user group

A tried and proven instrument for clarifying the needs of a user group is the so-called challenge workshop. A challenge is conceptualized to define the goal, scope, and scale of actions. The important point when defining the challenge is ensuring that the project performs the relevant tasks that lead to a successful product. The defined objective helps to maintain focus throughout the project. The visualized challenge also helps with difficult discussions and decisions during the project. Define the challenge as precisely as necessary, but as openly as possible so that there is latitude for the following step of finding a solution.

- Finding solutions: five steps to solve the challenges.

1. Select the user group for which a problem is to be solved subsequently; present the needs and relationships of its members plus their everyday work and processes.

2. Analyze the regulations and find out what they mean in relationship to the users and the technology.

3. Generate ideas on what kind of translation support, tool, or method could help the selected user group to develop and operate the products and services in compliance with the law. Consider the provided information about the users and their positions. Prioritize ideas based on how this initiative fits into the users’ positions and whether a win-win situation is created.

4. Design prototypes as early as possible and test them with the user group. Check that their needs are met and that the users can operate the initiative independently.

5. Prioritize rapid development and a launch for the initiative that can be adapted as easily as possible in further operation and that initially meets basic needs as its primary goal. Always proactively seek feedback and continuously improve processes and content.

Examples of possible action portfolio

Starting from the problem areas described above, you can create a fully comprehensive action portfolio with the user group that simplifies the integration of individual value concepts and the GDPR, increases security for all responsible parties, and is adapted to the users’ working habits. This portfolio may, for example, consist of an internal information website as a “single point of information” offering the latest developments and regulations concerning the law as well as best practices and online training. From here, it would be possible to access all other tools that have been developed specifically for the individual problem areas that have been analyzed.

This might include a manual, for example, in which the framework for action is translated and explained in practical terms. It provides information on what the “transparency obligation” referred to in the GDPR means when applied to different systems and data categories. It is valuable for users to have a framework for action and to be able to compare best practices and examples as well as contact experienced advisors for help, especially when the wording of the statutory provisions leaves considerable room for interpretation.

Furthermore, a checklist for all compliance-related processes is helpful as a means of ensuring that the development team correctly uses all tools and methods. Additionally, a risk assessment should identify the (detailed) regulations that must be observed and provide appropriate solution and risk mitigation proposals as well as road maps. A process that queries the important actions for each identified risk level and documents them for safekeeping is also essential for the concluding review.
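A process of this kind, which queries the required actions per risk level and documents them, could be sketched as follows. This is an illustrative sketch only: the risk levels, the action names, and the `ReviewRecord` class are hypothetical assumptions and not part of any described tool, and a real catalog of actions would come from the company's own risk assessment.

```python
from dataclasses import dataclass, field
from datetime import date

# Hypothetical mapping of risk levels to the actions that must be
# confirmed before the concluding review; purely illustrative.
REQUIRED_ACTIONS = {
    "low": ["Record processing purpose"],
    "medium": ["Record processing purpose", "Check transparency notice"],
    "high": [
        "Record processing purpose",
        "Check transparency notice",
        "Consult compliance advisor",
    ],
}


@dataclass
class ReviewRecord:
    """Documents which required actions a project has completed."""

    project: str
    risk_level: str
    completed: dict[str, date] = field(default_factory=dict)

    def confirm(self, action: str, on: date) -> None:
        """Store the completion date of an action required at this risk level."""
        if action not in REQUIRED_ACTIONS[self.risk_level]:
            raise ValueError(f"{action!r} is not required at level {self.risk_level!r}")
        self.completed[action] = on

    def open_actions(self) -> list[str]:
        """Return required actions that have not been confirmed yet."""
        return [a for a in REQUIRED_ACTIONS[self.risk_level] if a not in self.completed]

    def ready_for_review(self) -> bool:
        """A project is ready once no required action remains open."""
        return not self.open_actions()


# Usage with a hypothetical project at medium risk.
record = ReviewRecord(project="support-chatbot", risk_level="medium")
record.confirm("Record processing purpose", date(2022, 1, 10))
remaining = record.open_actions()  # ["Check transparency notice"]
```

Keeping the completion dates in the record means the documentation needed for the concluding review is produced as a by-product of the team's normal work rather than in a separate audit step.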

Conclusion

Authorities have begun to utilize the full scope of the penalties provided in the GDPR, which can have serious consequences for companies and individuals. Most companies still employ complicated, static processes to ensure the legal compliance of their own products or services. If they are to secure their competitiveness, they need new concepts for innovative processes and methods that integrate laws into their operational business. Regardless of the approach that is taken, we believe that support during the implementation and translation of laws and regulations empowers users to develop legally compliant data-driven innovations, laying the foundations for business operations that are prepared to meet the challenges of the future.