Digital consistency, meaning data that is available end to end across systems, and the extended analysis options it enables have a demonstrably positive effect on business. Manufacturing companies that actively work with their data are more successful, as the current "Digitalisation Index for SMEs" study shows. Current requirements arising from the Internet of Things and networked production can also be mastered more easily in this way. Interdisciplinary collaboration models such as data thinking and tools such as low code can solve the associated data challenges with reasonable effort.

The unexpected often happens: supply chains break down in a pandemic, a tanker runs aground in the Suez Canal, or a product suddenly shows signs of damage. In each case, a quick overview of many details is needed. Planned business strategies, too, require constant monitoring and, where necessary, optimisation. The prerequisite in each case is data with a clear meaning, available from many sources.

Data as a success factor and hurdle

However, data is not a success factor straight away; at first it is also a hurdle. Current fields of action such as individualised mass production, which has to translate ongoing customer feedback into many variants, depend on data that is available across systems and has a clear meaning. Requirements such as agile, global partner networks and high energy and resource efficiency, up to waste-free production, likewise require continuous data along the product life cycle. Typical challenges for the holistic analysis of data then arise from, among other things, the technical integration of information technology (IT), operational technology (OT) and engineering technology (ET), especially as this integration has to satisfy requirements such as data security.

Future manufacturing scenarios are even more complex. One example is so-called cognitive manufacturing, with its hyper-networking of all machines, AI-controlled operation, smart optimisation of resources and manufacturing-as-a-service, in which direct customer feedback, for example from sensors in products, triggers manufacturing processes flexibly and promptly. However, many of these demanding technical challenges can be tackled in a concrete, value-creating way through digital consistency and the aggregation of data into information that it makes possible. On this basis, there would also be enormous potential for new, information-centric business models that create new customer experiences, increase productivity or accelerate decision-making.

Even if a sustainable architecture for digital consistency in data and processes is still missing in many companies - for example, a platform level that connects digital twins, data lakes, analytics and app stores across the company - there are methods and tools that can deliver quick successes with measurable results.

Data Thinking as a triad of tried and tested methods

A typical cause of failure in Industrial IoT (IIoT) projects is still a lack of mutual understanding between the IT department, which is supposed to prepare data for business models, and the business departments, which lack a concrete idea of the possibilities and limits of analytics and artificial intelligence. Since new business models usually represent a triad of innovation, data science and agility, it makes sense to combine the best of design thinking, CRISP-DM (cross-industry standard process for data mining) and agile development into one framework. This "data thinking" process, developed by Detecon, combines a focus on the business field of action with agile methods for solution design and implementation. It is intended to give data-driven projects a higher success rate.

In data thinking, the focus is no longer on technology or data alone, but on the joint development of business models. For this purpose, a project team consisting of an innovation coach, representatives of the business units, data scientists and data engineers identifies the business need and various solution approaches. Using several proofs of concept, implementation difficulties are identified as early as lab mode, and the approaches can be weighed against each other before implementation. Typical iterations concern optimising data quality, comparing scenarios and algorithms, and adding variables to regressions, decision forests and neural networks.

Low code for the corporate app store

One technical challenge of data-driven initiatives remains the semantically correct merging of data from a wide variety of internal and external sources and its business-relevant use in analyses and actions. Low code is a particularly valuable and pragmatic tool for meeting short-term information needs about operations. A low-code development platform enables interested parties to build their own business apps without special IT knowledge and independently of complex data formats.
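To make "semantically correct merging" more concrete, the following is a minimal sketch of what such a harmonisation step might look like underneath a low-code building block. The source names (`mes`, `plc`), the field names and the `Reading` schema are all hypothetical and chosen only for illustration; they do not come from Detecon's platform.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, Tuple


@dataclass(frozen=True)
class Reading:
    """Hypothetical canonical record with one agreed meaning and unit per field."""
    machine_id: str
    temperature_celsius: float


# Hypothetical mapping: source system -> raw field -> (canonical field, converter).
FIELD_MAP: Dict[str, Dict[str, Tuple[str, Callable[[Any], Any]]]] = {
    "mes": {
        "machine": ("machine_id", str),
        "temp_c": ("temperature_celsius", float),
    },
    "plc": {
        "id": ("machine_id", str),
        # This source reports Fahrenheit, so the value is converted to Celsius.
        "temp_f": ("temperature_celsius", lambda f: (float(f) - 32) * 5 / 9),
    },
}


def harmonise(source: str, raw: Dict[str, Any]) -> Reading:
    """Translate one raw record into the canonical schema; unmapped fields are ignored."""
    mapping = FIELD_MAP[source]
    fields = {}
    for name, value in raw.items():
        if name in mapping:
            canonical, convert = mapping[name]
            fields[canonical] = convert(value)
    # Raises TypeError if a required canonical field is missing from the record.
    return Reading(**fields)


from_mes = harmonise("mes", {"machine": "M1", "temp_c": "100"})
from_plc = harmonise("plc", {"id": "M1", "temp_f": 212, "vendor_flag": 7})
print(from_mes == from_plc)
```

Once both sources are expressed in the same schema, their records can be compared and aggregated directly, which is the precondition for the analyses described above.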

As with a Lego construction kit, standard programming blocks can be graphically assembled into function blocks. This makes it possible, for example, to build apps that use crowd intelligence from external sources to determine the optimal settings of a machine type in use worldwide, or apps that calculate the best maintenance intervals based on predictive maintenance. So-called no-code platforms take this principle to the extreme: no programming is required at all, and tasks are put together purely logically. So if factory planners need a certain piece of information, they can collect it themselves using low code or no code in order to simulate and control factory operations in the best possible way.

Practical test in the Industrial IoT Center: OEE and building management

Detecon demonstrates the interaction between methods such as data thinking and programming environments such as a low-code platform in practice at its Industrial IoT Center in Berlin, for example by improving overall equipment effectiveness (OEE) in an ecosystem of digital twins.

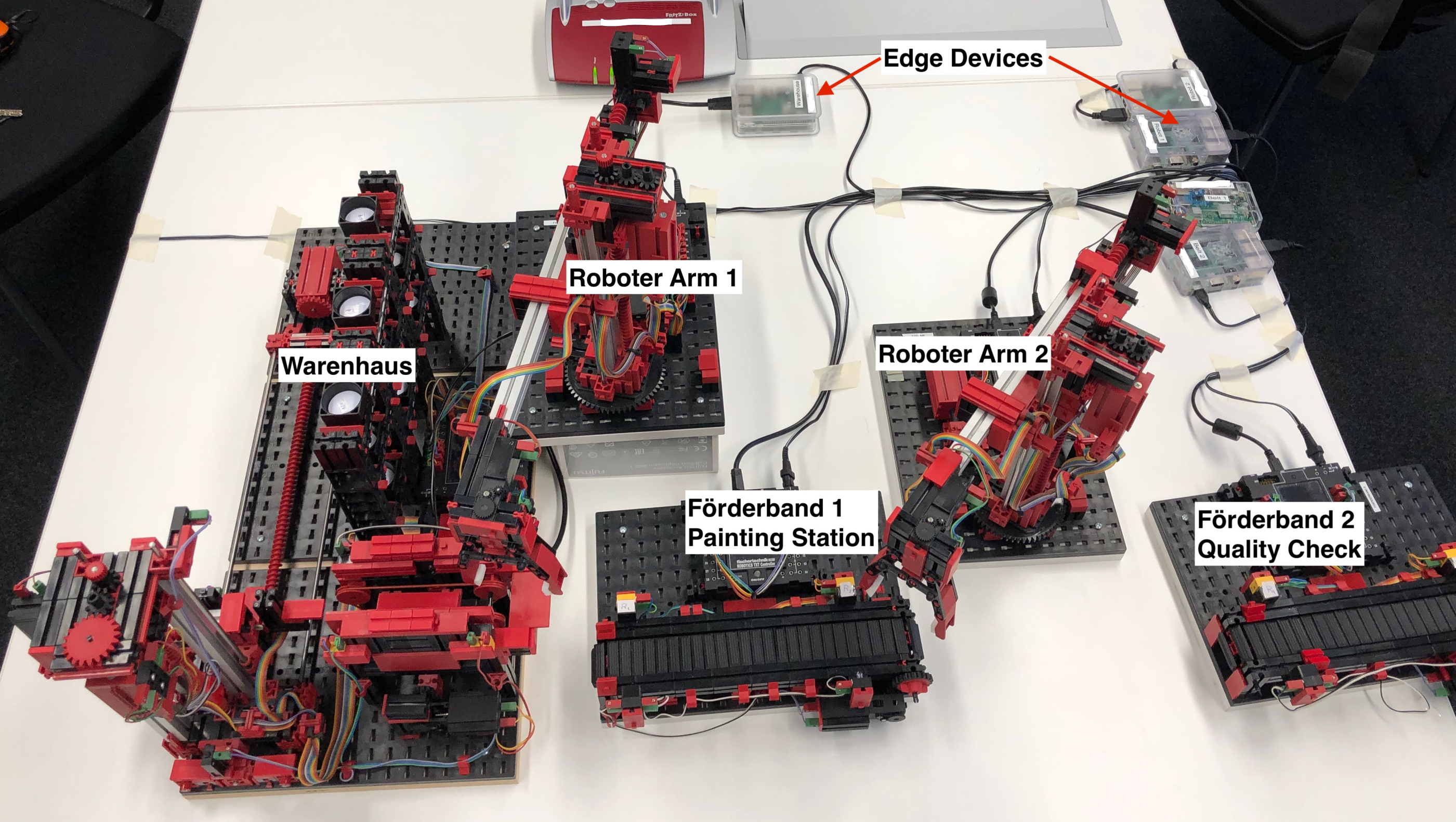

Figure: Practical demonstration of the Industrial IoT Center (Fischertechnik model factory)

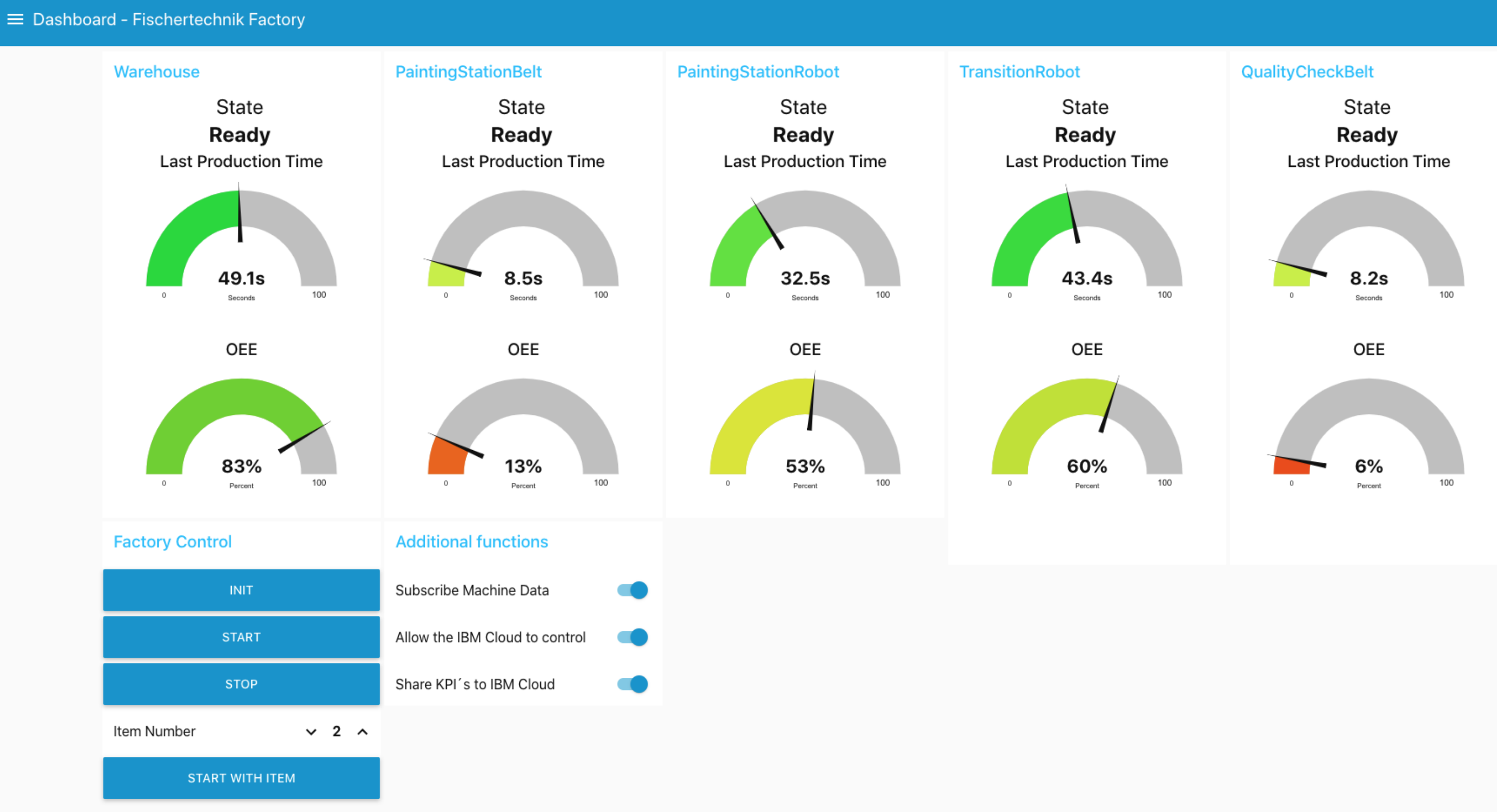

Here, workpieces pass through individual workstations in a Fischertechnik model factory and are transported by robot arms and conveyor belts. Each workstation represents an imaginary machine and is equipped with an edge device in the form of a Raspberry Pi computer. These devices in turn are connected to cloud applications via low code. The entire operating process can then be monitored in real time via the cloud. Various KPIs are visualised on a dashboard. In the event of malfunctions, messaging systems prompt action. There is a digital twin for each workstation, and also one for the entire factory as a whole (system of systems). The overall system can answer typical questions from a potential planner, for example that 3000 pieces will be produced by tomorrow, provided line 3 has no problem. An OPC Unified Architecture information model, on which the demonstration is based, describes how the respective machine data is to be interpreted.

Figure: Dashboard for Overall Equipment Effectiveness (OEE) realised with Low Code
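OEE is conventionally calculated as the product of availability, performance and quality. The sketch below illustrates that calculation for a single workstation; the `ShiftRecord` structure and the example figures are assumptions made for illustration and are not taken from the Detecon demonstration.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class ShiftRecord:
    """Hypothetical per-workstation counters for one shift (illustrative only)."""
    planned_minutes: float      # planned production time
    downtime_minutes: float     # unplanned stops within the planned time
    ideal_cycle_seconds: float  # ideal time to produce one piece
    total_pieces: int           # all pieces produced, good or bad
    good_pieces: int            # pieces that passed the quality check


def oee(record: ShiftRecord) -> Dict[str, float]:
    """Return availability, performance, quality and their product (OEE)."""
    run_minutes = record.planned_minutes - record.downtime_minutes
    if record.planned_minutes <= 0 or run_minutes <= 0 or record.total_pieces <= 0:
        # Nothing was planned or produced, so all factors are reported as zero.
        return {"availability": 0.0, "performance": 0.0, "quality": 0.0, "oee": 0.0}
    availability = run_minutes / record.planned_minutes
    performance = (record.ideal_cycle_seconds * record.total_pieces) / (run_minutes * 60)
    quality = record.good_pieces / record.total_pieces
    return {
        "availability": availability,
        "performance": performance,
        "quality": quality,
        "oee": availability * performance * quality,
    }


# Made-up example: 8-hour shift, 60 minutes of downtime, 6-second ideal cycle.
shift = ShiftRecord(
    planned_minutes=480,
    downtime_minutes=60,
    ideal_cycle_seconds=6,
    total_pieces=3780,
    good_pieces=3591,
)
result = oee(shift)
print({name: round(value, 3) for name, value in result.items()})
```

With these made-up figures, availability is 0.875, performance 0.9 and quality 0.95, giving an OEE of roughly 0.748. Reporting the three factors separately, rather than only their product, shows where a loss originates.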

In another example from the Detecon IIoT Center in Berlin, a demonstration was created for (real estate) building and facility planning. Planning scenarios were transferred from a requirements management system into a hardware simulator using low code. In this way, building parts were virtually fitted with sensors, and control logic was derived from the information obtained.

Secure development environment

From a governance perspective, low code offers the important advantage that the development environment complies with proven security standards. Technical, factory or quality departments can obtain information independently, without changing or even damaging existing IT systems and without tying up the capacity of IT developers. Data scientists who contribute AI code or machine learning algorithms can also be integrated.

In summary, data thinking and low code open up new potential: monetisable information can be gained in short sprints, for example within 50 days, and used to build new, innovative business services that are then tested concretely as minimum viable products (MVPs).